Last week in an interview, I was asked how I might approach reviewing and improving an org's Software Development Lifecycle (SDLC).

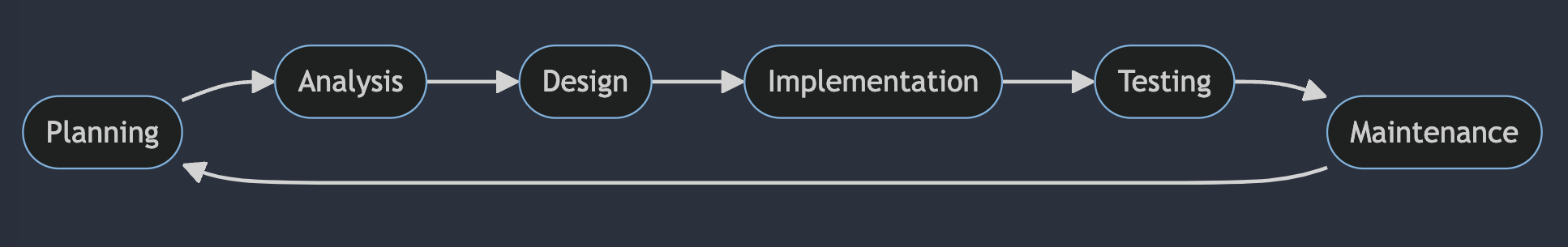

The SDLC is often depicted as a perfect circle: everything always moving forward perfectly and synchronously in a happy little ♾️ loop. A Merry-Go-Round, where every cycle means 📈 and 💸.

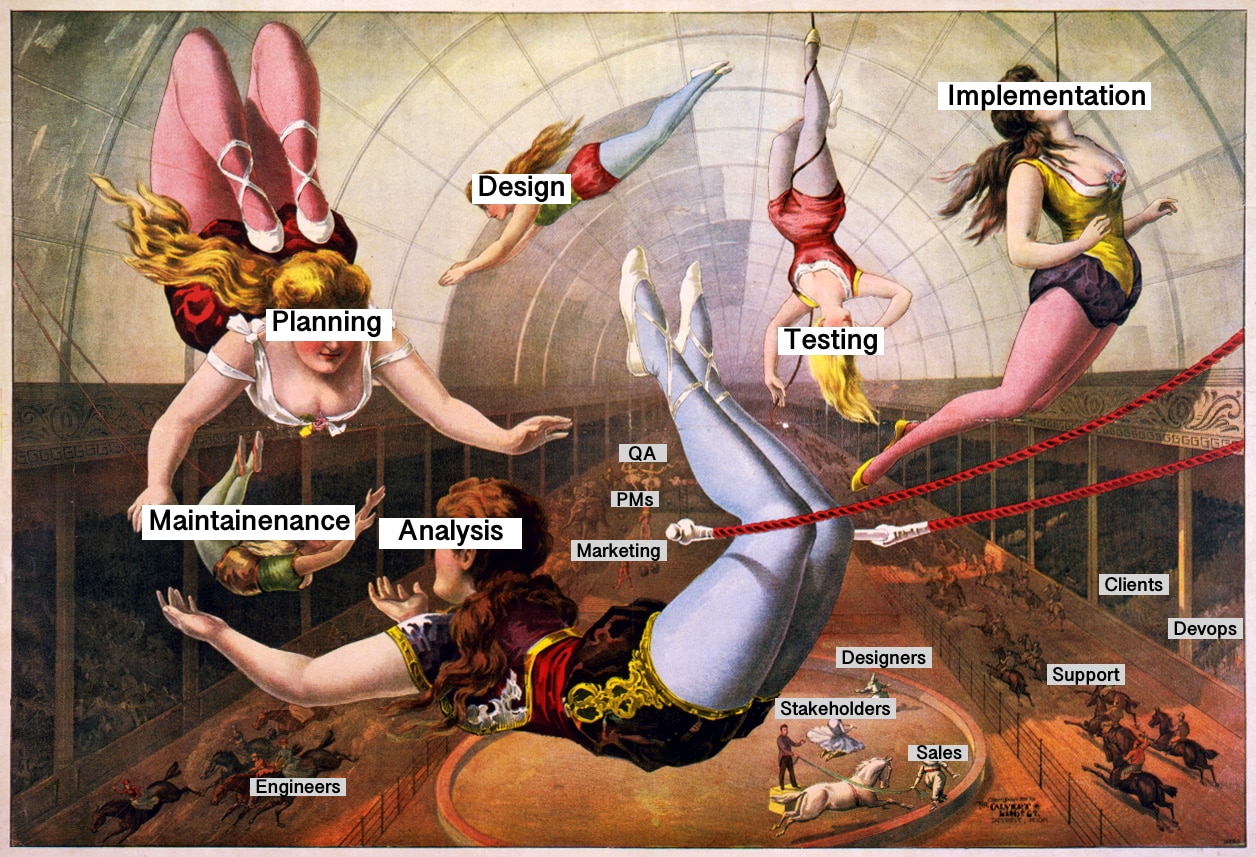

The Phases of the Lifecycle don't usually show this, but on new or existing projects we're typically dealing with 7-9 personas, sometimes more: Stakeholders, Product Managers + Product Marketing, Engineers, Designers, QA / Testers, DevOps, Support teams, and sometimes Sales teams.

It's less a Merry-Go-Round and more a Trapeze act. Success relies on the teams' interpersonal strengths, cohesion, and trust, and on the organizational prowess of the ringleader.

There is a great deal of nuance from company to company, from team to team, and even from project to project.

Too Long; Didn't Read

- SDLC processes must be individually tailored toward the specific needs of one's organization, its clients, and the team members operating within those spheres

- Organizations could conceivably have multiple SDLC processes for separate teams that perform better under different guidelines based on their individual needs

- The balance between speed and quality is distilled down into impact, risk, and reward

- Lastly, having someone who can navigate the chaos and orchestrate it all is vital as the organization grows

The right process is the one where the company and its clients derive the most value with the least overhead and the least risk, all while staying functional and not going insane.

Layers of processes are added over time to mitigate risk and impact, but too much process can grind the organization's agility to a halt. Retrospectives around processes themselves are vital as orgs scale.

Why Reviewing SDLC Process Is Critical

When the SDLC isn't honed to teams' specific needs, we might expect:

- Stress on employees; staff retention issues; burnout

- More bug reports and hotfixes, which add unnecessary load to Support teams

- Inability to operate effectively (cannot get work done; can't be agile)

- Sprint velocity is degraded (the Maintenance piece is bleeding into new sprints due to lack of planning for Maintenance)

- Disruption of clients' operations

- Client churn / loss of business

Nomenclature

To talk about how to shape an org's SDLC, let's settle on a few definitions first.

Risk

The likelihood that something will go wrong. This can happen during any Phase of the SDLC when due diligence is skipped.

Impact

If something goes wrong, how widespread is it?

- Does it impact 1 client or every client?

- Is it a broken page due to a JS runtime error, or are we talking about disrupted critical processes, exploited security vulnerabilities, or catastrophic data loss?

Reward

When the SDLC is followed, the reward shows up in how we answer questions like these:

- Staff satisfaction with the process. Was the stress of the collective teams managed appropriately? Do they feel satisfied with the outcome?

- Are the end-users happy?

- Did this project impact other projects in an unexpected way?

- Are we staying nimble?

- Are our roadmap(s) on track?
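One way to make these three terms concrete is to score them. The sketch below is only an illustration: the `ChangeAssessment` name, the 0-1 and 1-5 scales, and the thresholds are all assumptions made for this example, not a standard or a recommendation.

```python
from dataclasses import dataclass


@dataclass
class ChangeAssessment:
    """Hypothetical record for one proposed change; every scale here is an assumption."""
    name: str
    risk: float   # likelihood something goes wrong: 0.0 (never) to 1.0 (certain)
    impact: int   # how widespread the damage is: 1 (one broken page) to 5 (every client, data loss)
    reward: int   # expected benefit: 1 (minor) to 5 (major)

    def exposure(self) -> float:
        # Expected damage: how likely a failure is, times how bad it would be.
        return self.risk * self.impact

    def process_weight(self) -> str:
        # Illustrative thresholds: more exposure earns more process.
        if self.exposure() >= 2.0:
            return "heavy"
        if self.exposure() >= 0.5:
            return "standard"
        return "light"

    def worth_doing(self) -> bool:
        # Crude cost-benefit check: the reward should outweigh the exposure.
        return self.reward > self.exposure()


copy_tweak = ChangeAssessment("Reword help text", risk=0.1, impact=1, reward=1)
billing_rewrite = ChangeAssessment("Rewrite billing sync", risk=0.5, impact=5, reward=4)

for change in (copy_tweak, billing_rewrite):
    print(change.name, change.process_weight(), change.worth_doing())
```

The point is not the numbers themselves but that a low-exposure change and a high-exposure change should not be pushed through the same amount of process.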

Planning

Plan too slowly and you're a Waterfall shop. You've stepped through every contingency, how all other systems are impacted, and you're pretty darn sure how it will be received by the clients, often because you've had them involved as Stakeholders every step of the way.

Plan too quickly and your quality goes down and long-term costs go up. Implementing client requests at face value, without dissecting the underlying ask and without bringing in SMEs (subject matter experts) to weigh the ask against what's feasible, can lead to catastrophe.

Often, there must be a balance between being "too agile" and "too waterfall", especially as one's product offering grows and becomes more complex.

Considerations:

- What are we trying to achieve?

- What is the problem?

- Who are our stakeholders?

- Which Phases of the Lifecycle will be most critical for this project and why?

- If we're planning changes to existing systems, are there additional Pre-Existing System Change Management requirements to consider?

- Which teams / departments need to be involved for which Phases?

- Which departments / clients do we want to notify? Do we need to provide material before, during, or after deployment of this project?

- Do we want it feature-flagged and why?

- Is this a direct new source of revenue? If yes, what does that mean about how we integrate this project meaningfully into our other systems?

- How much time has passed between receiving the initial asks that led to this project and completing planning? Alternatively, are stakeholders done highlighting their needs? If not much time has passed, or stakeholders aren't done, new requirements could still funnel in and cause downstream problems with the project.

Analysis

During Analysis, we're trying to understand what resources the business is willing to commit to the project and if it's feasible to implement that project within the defined parameters. This is specifically an area of cost-benefit analysis where many projects are closed as Won't Do if we're doing it right.

Considerations:

- How many resources do we need to dedicate to this and for how long? Are we willing to invest those resources?

- How are other systems impacted by the addition of this system?

- What does the data-model look like? Will that scale appropriately?

- Is this a critical system or an auxiliary system?

- Once implemented, are we willing to spend time iterating on this project until customers are fully happy or is this a single-iteration project?

- What do we plan to do if the launched system is not well-received?

- Are we willing to kill this system if the ongoing costs are going to exceed revenue (as it relates to subscription fees or resources to maintain the project)?

- Do we need product marketing or support educational content?

- Is this big enough that we need to hire across different departments to support this?

- How many Implementation unknowns are there at this stage? If there are too many, spending some time on R&D during this phase can be critical to project success.

Pitfalls:

- Engineers and SMEs weren't involved in Analysis or this was skipped altogether and there is friction around feasibility.

- Analysis was approved too quickly and moved to the Implementation phase, resulting in overwhelmed engineers or no resources available for the project. Burnout could occur, especially if the implementation schedule was never discussed.

- Inversely, bikeshedding around which features make the cut and which don't, as well as nitty-gritty implementation details.

Design

Ideally, in the Design phase, the plan, analysis output, and schedule were clearly articulated to designers, who can then work alongside PMs and Engineers to ensure that the design is just as feasible as the original plan and analysis.

Considerations:

- Is there a UI Kit and pre-existing patterns we can easily conform to such that we can skip creating new Mockups for this?

- If this system must be built with custom components instead of preexisting ones, why?

- What is the MVP goal and the North-star goal? Should designers implement mockups for both?

- If there are specific implementation deadlines, were those clearly stated so that designers aren't focusing on the north-star goals?

- Is this a critical system that deserves a lot of focus, or is it non-critical?

- Do clients / stakeholders need to be involved in the Design phase?

- What is the process for engineers to request iterations on the Design if they run into snags during Implementation?

- Do we need A/B tests or Metrics reporting? If so, on which pieces and how will it be used?

- Once this design goes live, will it be easy to iterate on or will it be locked in due to client familiarity with that system?

- What on-screen help and informational text can we provide to end-users to help them understand how it works? Where do they go for more information?

Pitfalls:

- Designs potentially being "too creative" when proper boundaries around the scope weren't put in place

- Inversely, designs that lead to a confusing UI/UX because they looked good on paper but didn't meet the key needs of the client.

- Hasty design decisions can have long-lasting impacts. Check out The Immovable Sync Now Button for an example.

Implementation

Considerations:

- Do we have the right resources available for this project? Each engineer has their own strengths and weaknesses.

- If it's a team that's working on this project, are there clear delineations for who works on what?

- Do we have all the data we need about the problem or is information still trickling in? If requirements change this far into the pipeline, the solution may need to be rebuilt.

- Were all unknowns sussed out during Analysis (specifically around libraries or external integrations)? If not, time estimates for implementation can change, impacting downstream projects.

- Does this project have enough resources or is there too much already on the leads' plate(s), which could lead to subpar quality? Are they being distracted by items getting injected mid-sprint?

- What does the code review process look like and how can that shape the quality of the work here? Are other engineers pulling down the code and testing the changes to look for cracks in the implementation or edge cases?

- If there are problems, is there a clear definition of who is the steward that fixes those problems?

- Is there proper documentation and code comments for new engineers to contribute (or if we need to come back to this project in 6 months will we remember how it works)?

- Did the engineer implement any automated tests to help cut down on back-and-forth with QA and to make future refactoring less risky?

Testing

- Do the project's stories have clear Acceptance Criteria for QA Testers?

- Are we seeking UI/UX feedback at this stage or just whether the system works or not?

- If the system is using feature flags and it's not in a working state, do we have clear guidelines for QA Testers about when they can do a full system regression test before we enable the Feature Flag in production?

- In UAT, are the stakeholders happy with the project's results before this goes to Production?
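For readers unfamiliar with feature flags, here is a minimal sketch of the idea: the code ships to production switched off, and is enabled for a QA account first so a full regression test can happen before clients see it. The `FeatureFlag` class, the flag name, and the client IDs are all hypothetical; a real setup would more likely use a flag service or a database table.

```python
from dataclasses import dataclass, field
from typing import Set


@dataclass
class FeatureFlag:
    """Hypothetical in-memory flag, for illustration only."""
    name: str
    enabled_globally: bool = False
    enabled_clients: Set[str] = field(default_factory=set)

    def is_enabled(self, client_id: str) -> bool:
        # On for everyone, or on for an explicit allow-list (e.g. a QA sandbox account).
        return self.enabled_globally or client_id in self.enabled_clients


flag = FeatureFlag("new-sync-flow")

# Step 1: deployed to production, but nobody sees it yet.
print(flag.is_enabled("acme-corp"))    # False

# Step 2: QA runs the regression test against a sandbox account only.
flag.enabled_clients.add("qa-sandbox")
print(flag.is_enabled("qa-sandbox"))   # True
print(flag.is_enabled("acme-corp"))    # False

# Step 3: QA and UAT sign off, so the flag is turned on for everyone.
flag.enabled_globally = True
print(flag.is_enabled("acme-corp"))    # True
```

The guideline QA needs is essentially "when does step 2 start": the point at which the flagged system is complete enough to be worth a full regression pass.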

Maintenance

Considerations:

- Who is overseeing the health of this system long-term? Is it the engineer that built it? A supervisor?

- If we receive feedback or bug reports, how critical are those items to get into upcoming (or current in some cases) sprints?

- Who is checking usage around the system and reporting on Metrics?

- Have we released any applicable Product Marketing / Internal / External How-To articles around this project?

- How many support tickets is this project generating month-over-month and can we reduce that down to zero?
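Tracking the support-ticket question can be as simple as comparing each month to the one before it. This is a rough sketch with made-up numbers; the function names and the ticket counts are invented for the example, and real data would come from whatever ticketing system is in use.

```python
from typing import List


def month_over_month_change(ticket_counts: List[int]) -> List[int]:
    """Difference between each month and the previous one (negative means fewer tickets)."""
    return [curr - prev for prev, curr in zip(ticket_counts, ticket_counts[1:])]


def is_trending_down(ticket_counts: List[int]) -> bool:
    """True when no month had more tickets than the month before it."""
    return all(change <= 0 for change in month_over_month_change(ticket_counts))


# Invented monthly ticket counts for one project, oldest month first.
tickets = [40, 31, 22, 25]

print(month_over_month_change(tickets))  # [-9, -9, 3]
print(is_trending_down(tickets))         # False: the last month went back up
```

An uptick like the last month above is a cue to ask whether a recent release, a missing How-To article, or a confusing piece of UI is driving the new tickets.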

Pitfalls:

- No maintenance plan was put in place, so a new system rolls out, isn't working to our or our clients' satisfaction, and immediately goes unmaintained, or there aren't resources to maintain it.

- Unwillingness to kill dead projects. Resources diverted from successful projects to keep the gangrenous system on life support.

Conclusion

Returning to the introductory analogy, the SDLC is rather a complex Trapeze act, where multiple projects are often running through the SDLC in parallel.

With proper planning and communication, the involved teams can stay agile, but as an organization scales and as products grow more complex, SDLC processes will likely need to adapt to the changing landscape.

Lastly, having a ringleader to help all teams navigate and operate cohesively is critical to keeping the SDLC healthy and keeping team members and clients happy as the company grows.